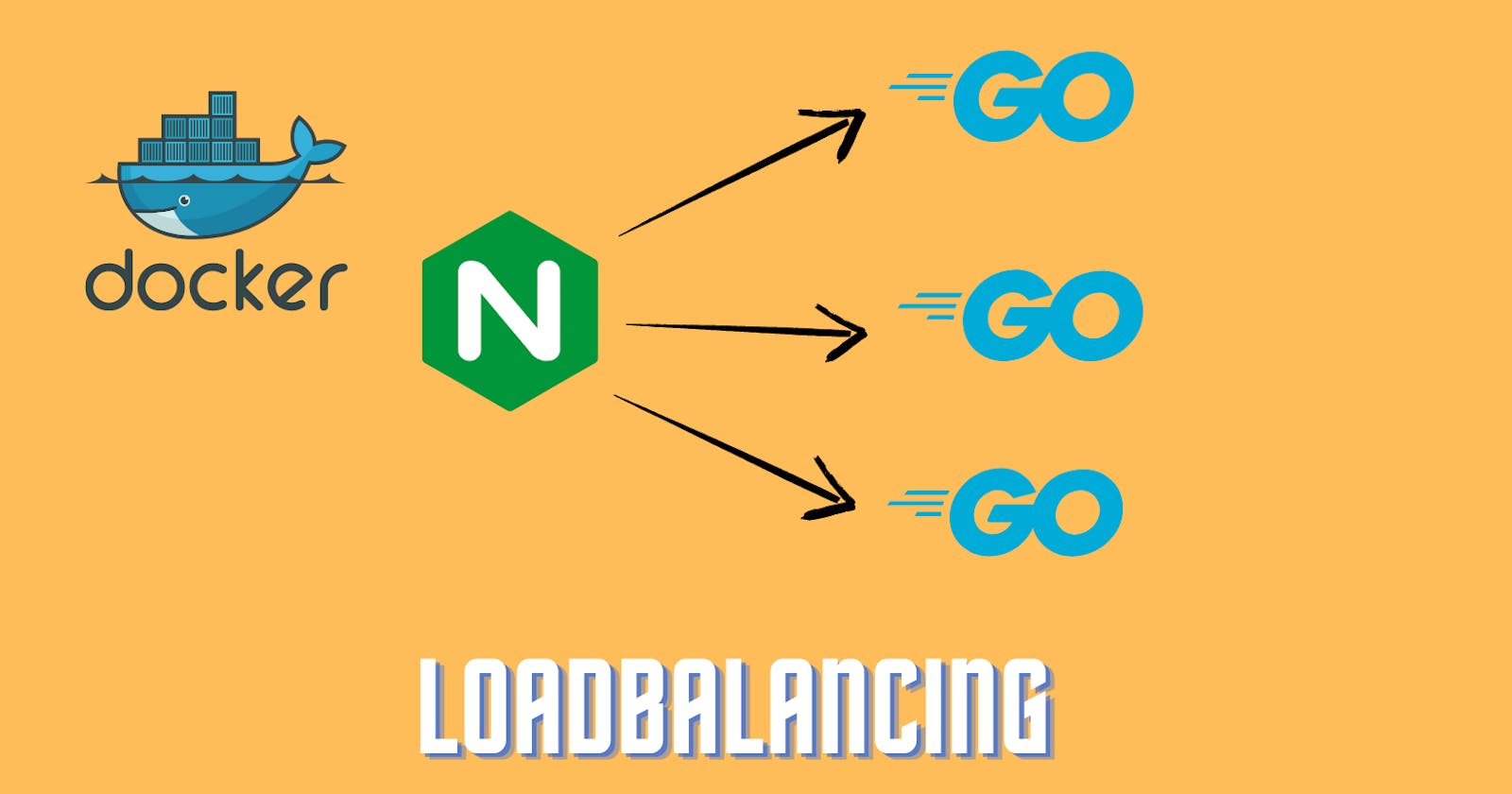

What is load balancing?

Imagine a single server receiving so many requests that our site becomes overloaded. This is where load balancing comes in. Instead of sending all network traffic to one server, a load balancer distributes incoming requests across a pool of servers. That way, no single server becomes overloaded.

Get Started with load balancing

Creating a service in Go

First, I created a folder called /golangService in the root directory and wrote a simple HTTP server in Go inside it. The server listens on port 5000 and handles requests to the /getResult path. When a user visits "localhost:5000/getResult", it responds with a message that includes the value of the SERVERID environment variable. Each container gets a different SERVERID, so the response tells us which server handled the request. If this isn't clear yet, don't worry; it will make sense as you follow along. Here's the code:

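```go
package main

import (
	"io"
	"log"
	"net/http"
	"os"
)

// Handler replies with a message that identifies this server instance,
// using the SERVERID environment variable set for each container.
func Handler(w http.ResponseWriter, r *http.Request) {
	serverID := os.Getenv("SERVERID")
	io.WriteString(w, "msg from server"+serverID)
}

func main() {
	// Route /getResult to Handler and serve on port 5000.
	http.HandleFunc("/getResult", Handler)

	if err := http.ListenAndServe(":5000", nil); err != nil {
		log.Fatal(err)
	}
}
```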

Next, we need to Dockerize the application by creating a file named "dockerfile" under /golangService. For simplicity, I am not using a multi-stage Docker build.

```dockerfile
# /golangService/dockerfile

FROM golang:1.16-alpine

# set the working directory inside the image
WORKDIR /app

# copy go.mod and go.sum into the working directory ("/app")
# note: COPY fails if go.sum is missing (a module with no dependencies may not have one)
COPY go.mod ./
COPY go.sum ./

# download dependencies
RUN go mod download

# copy all Go source files into the working directory
COPY *.go ./

# build the Go binary
RUN go build -o /main

# document that the server listens on port 5000
EXPOSE 5000

# run the Go binary
CMD [ "/main" ]
```

NGINX Service Configuration

Next, I created nginx/nginx.conf, which holds the NGINX configuration.

An NGINX configuration is made up of directives. Simple directives end with a semicolon. Block directives, also called contexts (such as events, http, upstream, and server), group other directives inside braces. NGINX runs a single master process, which reads the configuration and manages one or more worker processes, and the worker processes handle the incoming requests. By default, worker_processes is 1, and setting it to auto starts one worker per CPU core. Here, I set it to 4.

In the events block, I set worker_connections to 1024. This means each worker process can hold up to 1024 simultaneous connections, and that count includes connections to the proxied servers, not only connections from clients. Setting the value too high can exhaust system resources such as file descriptors and degrade performance, so choose a value that fits the resources you have.

The http block holds the traffic-handling configuration. The server block listens on port 80, and its location / block forwards every request to the upstream group named app. In other words, each request goes to one of the servers listed in the upstream block.

```nginx
worker_processes 4;

events { worker_connections 1024; }

http {
    # Define the group of servers available
    upstream app {
        server server1:5000;
        server server2:5000;
        server server3:5000;
    }

    server {
        # This server listens on port 80
        listen 80;

        location / {
            # forward every request to the "app" upstream group
            proxy_pass http://app;
        }
    }
}
```
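The two numbers above combine into a rough capacity estimate: worker_processes × worker_connections gives the total number of connections NGINX can hold open, here 4 × 1024 = 4096. The sketch below rests on an assumption worth stating plainly. Because worker_connections also counts connections to the proxied servers, a proxied request typically uses two connections (client and upstream), so roughly half of that total is available for clients. The function names are hypothetical.

```go
package main

import "fmt"

// maxConnections is the upper bound on simultaneous connections:
// every worker process may hold up to workerConnections of them.
func maxConnections(workerProcesses, workerConnections int) int {
	return workerProcesses * workerConnections
}

// maxProxiedClients is a rough estimate for a reverse proxy, assuming each
// proxied request holds one client connection plus one upstream connection.
func maxProxiedClients(workerProcesses, workerConnections int) int {
	return maxConnections(workerProcesses, workerConnections) / 2
}

func main() {
	fmt.Println(maxConnections(4, 1024))    // 4096
	fmt.Println(maxProxiedClients(4, 1024)) // 2048
}
```

In practice, the operating system's open-file limit also caps these numbers, which is why very high worker_connections values can backfire.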

NGINX supports several load-balancing methods, and we select one by changing the upstream block.

Round robin (the default: requests are distributed across the servers in turn, taking server weights into account)

```nginx
upstream app {
    # weights are optional; set them to suit your use case
    server server1:5000 weight=3;
    server server2:5000;
    server server3:5000;
}
```

Least connections (each request goes to the server with the fewest active connections, taking server weights into account)

```nginx
upstream app {
    # weights are optional; set them to suit your use case
    least_conn;
    server server1:5000 weight=2;
    server server2:5000;
    server server3:5000;
}
```
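"Taking weights into account" means comparing active connections per unit of weight rather than raw counts. The sketch below picks the backend with the smallest active/weight ratio and compares the ratios by cross-multiplying to stay in integers. The `upstream` type and the connection counts are hypothetical. Ties simply go to the first backend here, whereas nginx documents that tied servers are tried in weighted round-robin order.

```go
package main

import "fmt"

// upstream is a hypothetical view of one backend: its weight and how many
// requests it is currently serving.
type upstream struct {
	name   string
	weight int
	active int
}

// leastConn returns the index of the backend with the lowest active/weight
// ratio. a_i/w_i < a_b/w_b is checked as a_i*w_b < a_b*w_i (weights > 0).
func leastConn(ups []upstream) int {
	best := 0
	for i := 1; i < len(ups); i++ {
		if ups[i].active*ups[best].weight < ups[best].active*ups[i].weight {
			best = i
		}
	}
	return best
}

func main() {
	ups := []upstream{
		{name: "server1", weight: 2, active: 4}, // 4/2 = 2
		{name: "server2", weight: 1, active: 3}, // 3/1 = 3
		{name: "server3", weight: 1, active: 1}, // 1/1 = 1
	}
	fmt.Println(ups[leastConn(ups)].name) // server3
}
```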

IP hash (the server is chosen from a hash of the client's IP address; the first three octets of an IPv4 address, or the entire IPv6 address, are used as the key, so requests from the same address always go to the same server unless that server is unavailable)

```nginx
upstream app {
    ip_hash;
    server server1:5000;
    server server2:5000;
    server server3:5000;
}
```
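To see why clients in the same /24 network land on the same server, the sketch below builds the key the same way the text describes (three IPv4 octets, or the whole IPv6 address) and maps it onto the server list. nginx uses its own internal hash function, so FNV-1a from Go's `hash/fnv` is only a stand-in, and the actual server chosen for a given address will differ. The function names are hypothetical.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"net"
)

// ipHashKey builds the key as described for ip_hash: the first three
// octets of an IPv4 address, or the entire IPv6 address.
func ipHashKey(ip net.IP) []byte {
	if v4 := ip.To4(); v4 != nil {
		return v4[:3]
	}
	return ip.To16()
}

// pickByIP hashes the key (FNV-1a, an assumption) onto one of n servers.
func pickByIP(addr string, n int) (int, error) {
	ip := net.ParseIP(addr)
	if ip == nil {
		return 0, fmt.Errorf("invalid IP address %q", addr)
	}
	h := fnv.New32a()
	h.Write(ipHashKey(ip))
	return int(h.Sum32() % uint32(n)), nil
}

func main() {
	servers := []string{"server1", "server2", "server3"}
	// the first two addresses share 192.168.1, so they map to the same server
	for _, addr := range []string{"192.168.1.10", "192.168.1.99", "10.0.0.7"} {
		i, err := pickByIP(addr, len(servers))
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Println(addr, "->", servers[i])
	}
}
```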

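Generic hash (the server is chosen from a hash of a user-defined key, which can be text, variables, or a combination of them; here the key is the request URI)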

```nginx
upstream app {
    hash $request_uri;
    server server1:5000;
    server server2:5000;
    server server3:5000;
}
```
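With `hash $request_uri`, the same URI always goes to the same server, which helps when each backend caches responses per URL. The sketch below shows the idea with a plain hash-and-modulo mapping. CRC-32 is an illustrative choice rather than a claim about nginx's internals, and `pickByKey` is a hypothetical name.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

// pickByKey maps a key (for example the request URI, like $request_uri)
// onto one of n servers. The same key lands on the same server as long
// as n does not change.
func pickByKey(key string, n int) int {
	return int(crc32.ChecksumIEEE([]byte(key)) % uint32(n))
}

func main() {
	servers := []string{"server1", "server2", "server3"}
	// the first and last URIs are identical, so they print the same server
	for _, uri := range []string{"/getResult", "/getResult?page=2", "/getResult"} {
		fmt.Println(uri, "->", servers[pickByKey(uri, len(servers))])
	}
}
```

A plain modulo mapping reshuffles most keys whenever a server is added or removed. nginx's `hash $request_uri consistent;` form switches to ketama consistent hashing to limit that reshuffling.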

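Least time (each request goes to the server with the lowest average response time and the fewest active connections, taking server weights into account; this method is only available in the commercial NGINX Plus, so it will not work with the open-source nginx image used in this article)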

```nginx
upstream app {
    # header: use the time to receive the response header
    least_time header;
    server server1:5000;
    server server2:5000;
    server server3:5000;
}
```

Random (each request goes to a randomly selected server)

```nginx
upstream app {
    # "two": pick two servers at random, then choose one of them using the given method
    # (least_conn by default)

    # least_time=last_byte: use the time to receive the full response
    # (least_time requires NGINX Plus)
    random two least_time=last_byte;
    server server1:5000;
    server server2:5000;
    server server3:5000;
}
```

Configuring Dockerfile for NGINX

Next, we need a Dockerfile to build the NGINX service.

```dockerfile
# nginx/dockerfile

FROM nginx

# remove the default server configuration shipped with the image
RUN rm /etc/nginx/conf.d/default.conf

# copy our configuration file to the path nginx reads at startup
COPY nginx.conf /etc/nginx/nginx.conf
```

Creating docker-compose file

At the root of the project, I created a docker-compose.yml. Docker Compose is a tool for defining and running multi-container Docker applications. The file defines four services. The first, nginx, runs the NGINX load balancer. The other three run the same Go server from the /golangService folder, each with a different SERVERID environment variable, so the response shows which server handled the request.

```yaml
# docker-compose.yml

version: '3.4'

services:
  nginx:
    build:
      context: ./nginx/
    # start nginx after server1, server2 and server3 have been started
    # (depends_on controls start order; it does not wait for the servers to be ready)
    depends_on:
      - server1
      - server2
      - server3
    ports:
      - "80:80"
    restart: always

  server1:
    build:
      context: ./golangService
    environment:
      SERVERID: 1
    restart: always

  server2:
    build:
      context: ./golangService
    environment:
      SERVERID: 2
    restart: always

  server3:
    build:
      context: ./golangService
    environment:
      SERVERID: 3
    restart: always
```

Running the Application

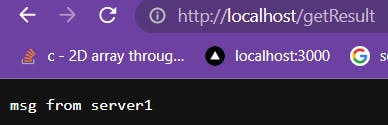

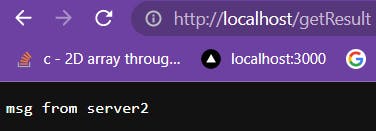

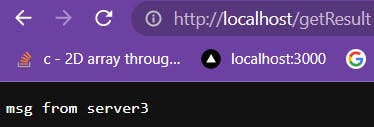

Now run the following command in the terminal to build and start the containers:

```shell
docker-compose up
```

Then open "localhost:80/getResult" (or simply "localhost/getResult") in the browser. If you reload the page a few times, you will see the SERVERID in the response change. The order in which it changes depends on the load-balancing method in use, such as round robin, IP hash, or least connections. With IP hash, for example, every request from your machine goes to the same server, so the SERVERID stays the same.
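Reloading a browser works, but a small client makes the distribution easier to see. Browsers may also send extra requests, such as for a favicon, that pass through the load balancer too. The sketch below assumes the stack from docker-compose is running on localhost port 80. It sends a few requests to /getResult and counts how often each response appears. With the default round robin, the three SERVERID values should appear in roughly equal numbers. `fetchAll` and `tally` are hypothetical helper names.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"time"
)

// fetchAll sends n GET requests to url and returns the response bodies in order.
func fetchAll(url string, n int) ([]string, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	bodies := make([]string, 0, n)
	for i := 0; i < n; i++ {
		resp, err := client.Get(url)
		if err != nil {
			return bodies, err
		}
		b, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			return bodies, err
		}
		bodies = append(bodies, string(b))
	}
	return bodies, nil
}

// tally counts how often each distinct response appeared.
func tally(bodies []string) map[string]int {
	counts := make(map[string]int)
	for _, b := range bodies {
		counts[b]++
	}
	return counts
}

func main() {
	bodies, err := fetchAll("http://localhost/getResult", 9)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	for _, b := range bodies {
		fmt.Println(b)
	}
	fmt.Println(tally(bodies))
}
```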

Our application now spreads incoming requests across three servers. The backend doesn't have to be written in Go; you can swap in a server written in another programming language.

Here's the GitHub link for this project: https://github.com/IRSHIT033/LoadBalancerDemo