What is Apache Kafka?

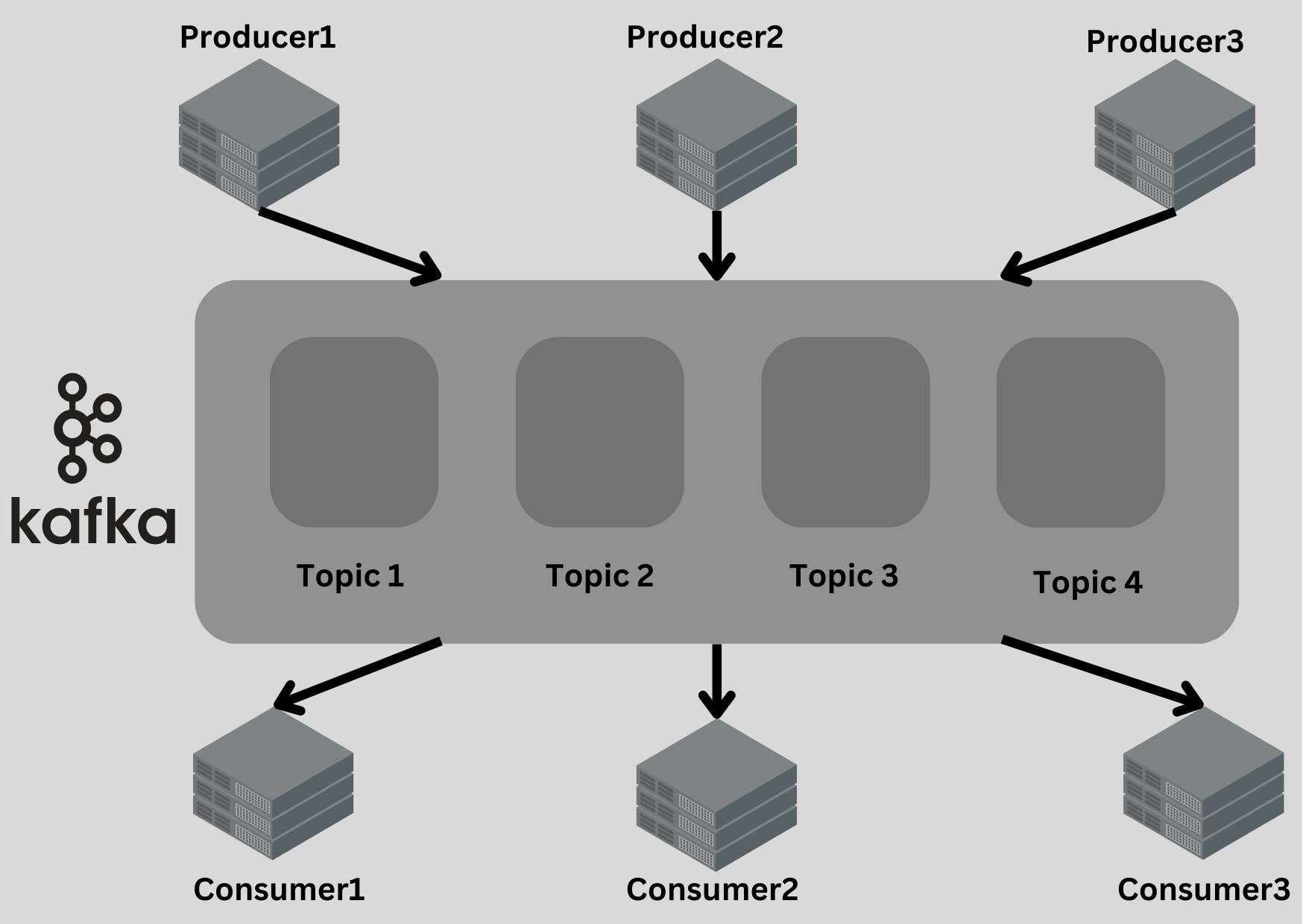

Apache Kafka is an open-source event-streaming platform. What is an event? An event is a record of something that happened in your application, such as a user ordering a product from their cart or an email being sent. Kafka is an excellent way for microservices to communicate. It supports the pub/sub model, scales horizontally, and provides high throughput and low latency.

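Is Apache Kafka a Message Queue?

No. Apache Kafka may seem similar to a message queue, but it is different. Kafka is essentially a set of event logs called topics. In Kafka, data is not deleted after consumption; it stays in the log until the topic's retention policy removes it, so several consumers can read the same events independently. In a typical message queue, a message is deleted once it has been consumed. Also, Kafka cannot scale consumption simply by adding consumers: the parallelism of a consumer group is limited by the number of partitions in the topic (continue reading to see why).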
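
To make the difference concrete, here is a minimal sketch in plain Go. The `Log` and `Queue` types are toy, in-memory stand-ins invented for this illustration; they are not part of Kafka or of any client library. The point is only that reading from a log leaves the records in place, while popping from a queue removes them.

```go
package main

import "fmt"

// Log is a toy append-only log: reading never removes records.
type Log struct {
	records []string
}

// Append adds a record to the end of the log and returns its offset.
func (l *Log) Append(record string) int {
	l.records = append(l.records, record)
	return len(l.records) - 1
}

// ReadFrom returns every record at or after the given offset.
func (l *Log) ReadFrom(offset int) []string {
	if offset < 0 || offset >= len(l.records) {
		return nil
	}
	return l.records[offset:]
}

// Queue is a toy message queue: a consumed message is removed.
type Queue struct {
	messages []string
}

// Push adds a message to the back of the queue.
func (q *Queue) Push(m string) { q.messages = append(q.messages, m) }

// Pop removes and returns the oldest message; ok is false when the queue is empty.
func (q *Queue) Pop() (msg string, ok bool) {
	if len(q.messages) == 0 {
		return "", false
	}
	msg = q.messages[0]
	q.messages = q.messages[1:]
	return msg, true
}

func main() {
	l := &Log{}
	l.Append("order-created")
	l.Append("email-sent")

	// Two independent readers each see the full log.
	fmt.Println(l.ReadFrom(0)) // [order-created email-sent]
	fmt.Println(l.ReadFrom(0)) // [order-created email-sent]

	q := &Queue{}
	q.Push("order-created")
	q.Pop()
	fmt.Println(len(q.messages)) // 0: the message is gone once consumed
}
```

In this model a reader only needs to remember its own offset, which is roughly how Kafka consumers track their position in a partition.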

What is a Kafka Topic?

A Kafka topic is a named, ordered log of related events or messages. For example, an application might keep several logs, such as one for orders and one for emails; each of these could be a topic. The data in a topic is stored as key-value pairs in binary format. We cannot run SQL queries against these logs. We need a producer to send data to the topic and a consumer to read data from it.
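
Because a topic only holds bytes, the application decides how to serialize its events. The sketch below assumes JSON as the encoding and uses a hypothetical `OrderEvent` type and `Record` struct made up for this example; real Kafka clients have their own message type, and other encodings (Avro, Protobuf) are equally possible.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Record mimics the shape of a message in a topic: a key and a value, both raw bytes.
type Record struct {
	Key   []byte
	Value []byte
}

// OrderEvent is a hypothetical application event used only for illustration.
type OrderEvent struct {
	CustomerID string `json:"customer_id"`
	Product    string `json:"product"`
}

// encodeOrder serializes the event to JSON and uses the customer ID as the key.
func encodeOrder(e OrderEvent) (Record, error) {
	value, err := json.Marshal(e)
	if err != nil {
		return Record{}, err
	}
	return Record{Key: []byte(e.CustomerID), Value: value}, nil
}

// decodeOrder turns the raw value bytes back into an OrderEvent.
func decodeOrder(r Record) (OrderEvent, error) {
	var e OrderEvent
	err := json.Unmarshal(r.Value, &e)
	return e, err
}

func main() {
	rec, err := encodeOrder(OrderEvent{CustomerID: "c-42", Product: "book"})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(rec.Key))   // c-42
	fmt.Println(string(rec.Value)) // {"customer_id":"c-42","product":"book"}

	back, err := decodeOrder(rec)
	if err != nil {
		panic(err)
	}
	fmt.Println(back.Product) // book
}
```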

What are Partitions in a Topic?

To scale a topic, Kafka breaks it into multiple logs called partitions, so the entire log does not have to be stored on one node. Kafka guarantees the order of messages within a partition, but there is no ordering of messages across partitions. If a message has no key, the producer spreads messages across all of the topic's partitions, for example in a round-robin fashion. If a message has a key, messages with the same key go to the same partition (as long as the number of partitions does not change). In a real-life use case, if each customer's events are stored in a topic, using the customer ID as the event key keeps that customer's events in one partition, so we can read them back in order.

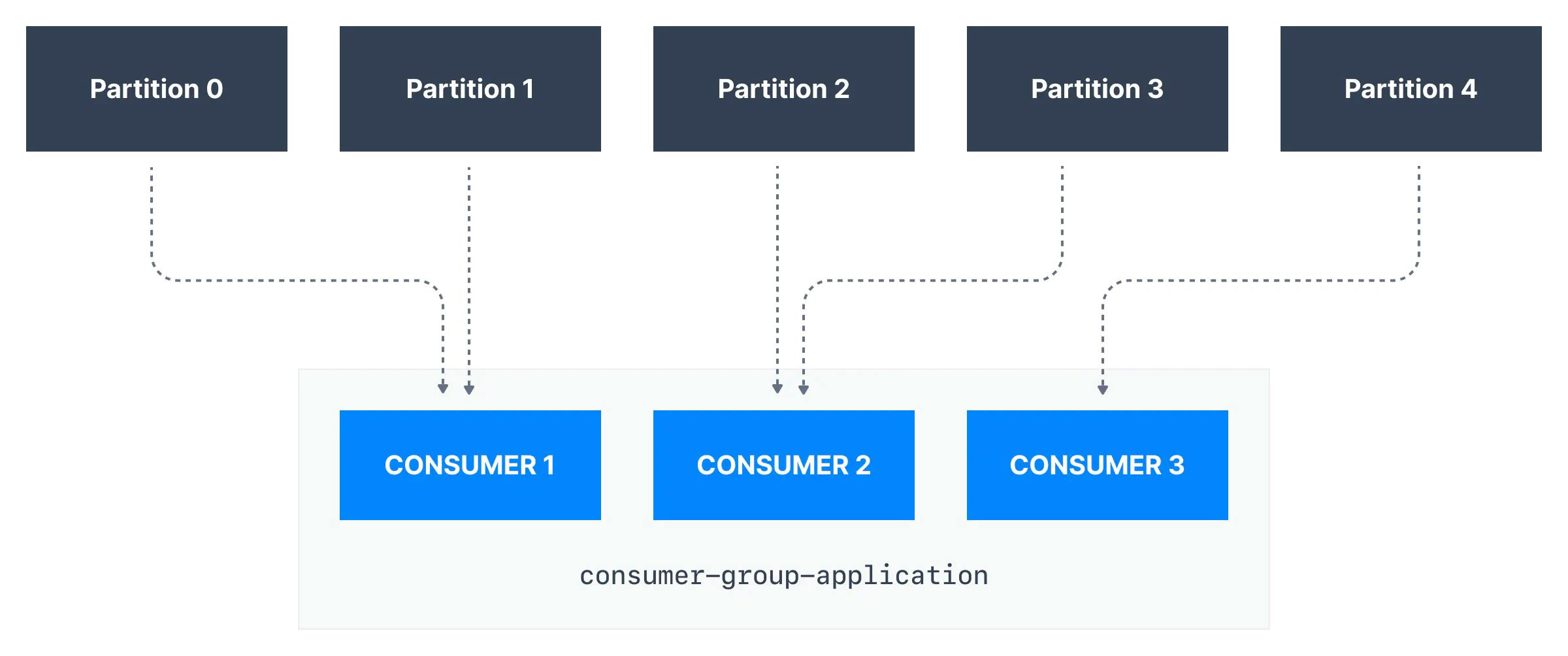
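
The key-to-partition idea can be sketched in a few lines of Go. This is an illustration only: the `Partitioner` type is hypothetical, and the FNV hash is an assumption chosen because it ships with the standard library. Real Kafka clients use their own hash functions and may use a different strategy for messages without a key.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// Partitioner is a toy partitioner; numPartitions must be greater than zero.
type Partitioner struct {
	numPartitions int
	next          int // round-robin cursor for messages without a key
}

// Partition returns the partition index for a message with the given key.
// An empty key means "no key": such messages rotate through the partitions.
func (p *Partitioner) Partition(key string) int {
	if key == "" {
		part := p.next
		p.next = (p.next + 1) % p.numPartitions
		return part
	}
	// Hash the key so that the same key always maps to the same partition.
	h := fnv.New32a()
	h.Write([]byte(key))
	return int(h.Sum32() % uint32(p.numPartitions))
}

func main() {
	p := &Partitioner{numPartitions: 3}

	// Events for one customer always land in the same partition,
	// so their relative order is preserved.
	fmt.Println(p.Partition("customer-42") == p.Partition("customer-42")) // true

	// Messages without a key rotate through the partitions.
	fmt.Println(p.Partition(""), p.Partition(""), p.Partition(""), p.Partition("")) // 0 1 2 0
}
```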

What are Consumer Groups in Kafka?

As the name suggests, a consumer group is a set of consumers that work together to consume data from a topic. Each partition is assigned to exactly one consumer in the group, which lets us consume the partitions of a topic in parallel. If one consumer fails or goes offline, Kafka reassigns its partitions to the remaining consumers, so processing continues and the risk of data loss is reduced. If there are more partitions than consumers in a consumer group, some consumers will consume from more than one partition, and if the partitions do not divide evenly among the consumers, the load will not be evenly distributed. Some consumers may then process more messages than others, which may not be desirable.
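
The effect of the partition-to-consumer ratio is easy to see with a small simulation. The `assign` function below is a hypothetical, simplified round-robin assignment written for this post; Kafka's real assignment strategies are more involved, so treat this as a sketch of the idea rather than of the actual algorithm.

```go
package main

import "fmt"

// assign spreads partitions 0..numPartitions-1 across the consumers in round-robin order.
func assign(numPartitions int, consumers []string) map[string][]int {
	assignment := make(map[string][]int, len(consumers))
	if len(consumers) == 0 {
		return assignment
	}
	// Register every consumer so that idle ones still show up in the result.
	for _, c := range consumers {
		assignment[c] = nil
	}
	for p := 0; p < numPartitions; p++ {
		c := consumers[p%len(consumers)]
		assignment[c] = append(assignment[c], p)
	}
	return assignment
}

func main() {
	// More partitions than consumers: the load is uneven (3 vs 2 partitions).
	fmt.Println(assign(5, []string{"c1", "c2"})) // map[c1:[0 2 4] c2:[1 3]]

	// c2 goes offline: the remaining consumer takes over every partition.
	fmt.Println(assign(5, []string{"c1"})) // map[c1:[0 1 2 3 4]]

	// More consumers than partitions: c3 has nothing to do.
	fmt.Println(assign(2, []string{"c1", "c2", "c3"})) // map[c1:[0] c2:[1] c3:[]]
}
```

The last case shows why adding consumers beyond the number of partitions does not increase throughput: the extra consumer simply sits idle.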

How to Use Kafka in Golang

Set up Kafka using a Docker image

I don't want the hassle of configuring Kafka on my local system, so I am running the ZooKeeper and Kafka Docker images in containers. First, create a docker-compose.yml file in the root directory of the project. Note that Kafka brokers use ZooKeeper to determine which broker is the leader of a given partition and topic and to perform leader elections. ZooKeeper also stores configuration for topics and permissions, and it sends notifications to Kafka when something changes (e.g. a new topic is created, a broker dies, a broker comes up, a topic is deleted).

    version: '3'
    services:
      zookeeper:
        image: confluentinc/cp-zookeeper:7.3.0
        hostname: zookeeper
        container_name: zookeeper
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000

      broker:
        image: confluentinc/cp-kafka:7.3.0
        container_name: broker
        ports:
          - "9092:9092"
        depends_on:
          - zookeeper
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: 'zookeeper:2181'
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092,PLAINTEXT_INTERNAL://broker:29092
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1

Producer service in Golang

A producer is a service that sends data to the topic.

    package main

    import (
        "fmt"
        "log"
        "os"
        "time"

        "github.com/confluentinc/confluent-kafka-go/kafka"
    )

    func main() {
        // Create the producer. "acks": "all" waits until all in-sync
        // replicas have acknowledged each message.
        p, err := kafka.NewProducer(&kafka.ConfigMap{
            "bootstrap.servers": "localhost:9092",
            "client.id":         "cli",
            "acks":              "all",
        })
        if err != nil {
            fmt.Printf("Failed to create producer: %s\n", err)
            os.Exit(1)
        }
        defer p.Close()

        // Name of the topic to write to.
        topic := "msg"

        // Delivery reports for produced messages arrive on this channel.
        deliveryChan := make(chan kafka.Event, 10000)

        for i := 0; i < 5; i++ {
            value := fmt.Sprintf("%d msg from producer", i)

            // Write the message to the topic.
            err := p.Produce(&kafka.Message{
                TopicPartition: kafka.TopicPartition{Topic: &topic, Partition: kafka.PartitionAny},
                Value:          []byte(value),
            }, deliveryChan)
            if err != nil {
                // Nothing was enqueued, so no delivery report will arrive;
                // skip the wait below instead of blocking forever.
                log.Println(err)
                continue
            }

            // Block until the delivery report for this message arrives.
            e := <-deliveryChan
            if m, ok := e.(*kafka.Message); ok && m.TopicPartition.Error != nil {
                log.Printf("Delivery failed: %v", m.TopicPartition.Error)
            }

            // Simulate a time-consuming step between events.
            time.Sleep(time.Second * 3)
        }
    }

Consumer service in Golang

A consumer is a service that reads data from the topic.

    package main

    import (
        "fmt"
        "log"
        "os"

        "github.com/confluentinc/confluent-kafka-go/kafka"
    )

    func main() {
        topic := "msg"

        // Set up the consumer.
        consumer, err := kafka.NewConsumer(&kafka.ConfigMap{
            "bootstrap.servers": "localhost:9092",
            "group.id":          "go",
            // Start from the oldest message when the group has no committed offset.
            "auto.offset.reset": "earliest",
        })
        if err != nil {
            // Without a consumer there is nothing left to do.
            log.Fatalln(err)
        }

        // Subscribe to the same topic as the producer.
        if err := consumer.Subscribe(topic, nil); err != nil {
            log.Fatalln(err)
        }

        for {
            // Wait up to 100 ms for the next event.
            ev := consumer.Poll(100)
            switch e := ev.(type) {
            case *kafka.Message:
                // A message was consumed; print its value.
                log.Println(string(e.Value))
            case kafka.Error:
                fmt.Fprintf(os.Stderr, "%% Error: %v\n", e)
            }
        }
    }
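
The consumer loop above runs until the process is killed. One common way to let such a loop exit cleanly is to check a stop channel on every iteration. The sketch below shows only that pattern, using the standard library: `pollFunc` and `consumeUntilStopped` are hypothetical names invented here, and the fake `poll` stands in for a real consumer's poll call.

```go
package main

import "fmt"

// pollFunc stands in for a consumer's poll call: it returns a message and true,
// or false when nothing arrived within the timeout.
type pollFunc func(timeoutMs int) (msg string, ok bool)

// consumeUntilStopped polls in a loop and hands each message to handle,
// returning as soon as the stop channel is closed.
func consumeUntilStopped(poll pollFunc, stop <-chan struct{}, handle func(string)) {
	for {
		select {
		case <-stop:
			return
		default:
			// No stop request yet; keep polling.
		}
		if msg, ok := poll(100); ok {
			handle(msg)
		}
	}
}

func main() {
	pending := []string{"0 msg from producer", "1 msg from producer"}
	stop := make(chan struct{})

	// Fake poll: hands out the pending messages, then asks the loop to stop.
	poll := func(timeoutMs int) (string, bool) {
		if len(pending) == 0 {
			close(stop)
			return "", false
		}
		msg := pending[0]
		pending = pending[1:]
		return msg, true
	}

	consumeUntilStopped(poll, stop, func(m string) { fmt.Println("got:", m) })
	fmt.Println("consumer stopped")
}
```

In a real service the stop channel would more likely be closed from a signal handler (for example one registered with `os/signal`), after which the consumer can be closed before the program exits.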

Conclusion

In conclusion, Apache Kafka is a powerful distributed streaming platform that provides a scalable and reliable way to process, store, and stream real-time data. It offers several benefits over traditional messaging systems, such as high throughput, low latency, fault tolerance, and high availability.

Kafka's unique architecture, which includes topics, partitions, producers, and consumers, allows for the efficient processing of large volumes of data streams. Additionally, Kafka's support for consumer groups allows for load balancing and parallel processing, making it an ideal choice for high-throughput and low-latency use cases.

Overall, Apache Kafka is a powerful tool for processing and handling large volumes of real-time data, and its popularity is only expected to grow in the future. Whether you're building a simple application or a complex data pipeline, Kafka is definitely worth considering for your streaming needs.